Large Geospatial Models, OVRMaps and the Future of Physical AI

2025-08-05

What Are Physical AI and Large Geospatial Models?

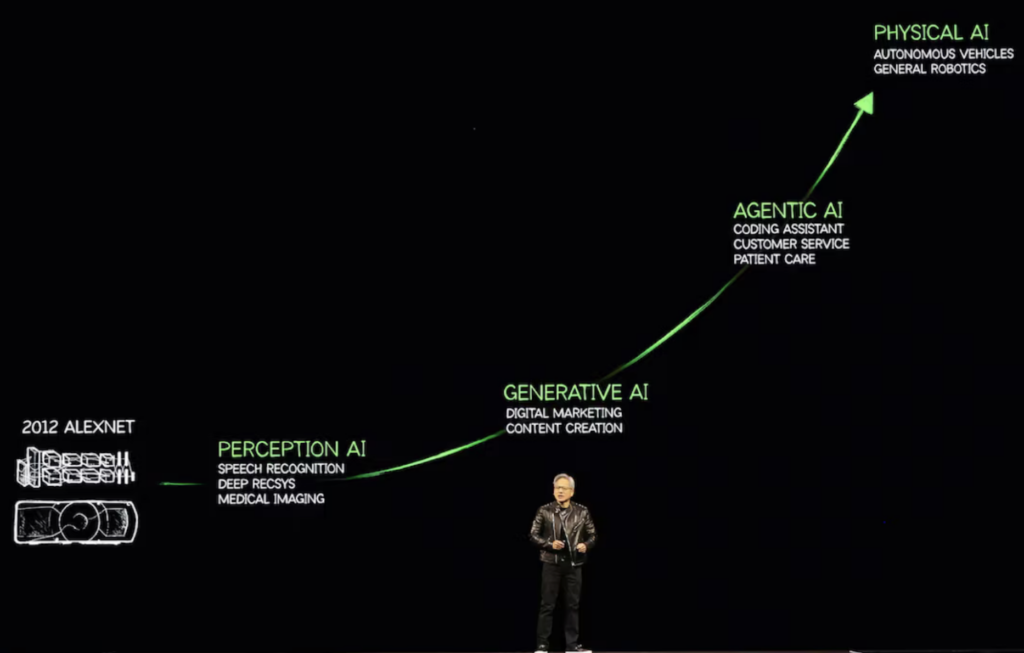

Since the launch of ChatGPT in November 2022, we have witnessed an explosion of interest in AI, and specifically in Large Language Models (LLMs), which can understand and generate human language after being trained on internet-scale amounts of text. The capabilities of LLMs cannot be overstated, and in the near future, they will automate most white-collar jobs. But a new frontier is emerging: Physical AI. The vision behind this new paradigm is to go beyond language, empowering machines and robots to interact with and complete tasks in the physical world, fostering a new industrial revolution and an era of unprecedented abundance.

Jensen Huang, NVIDIA’s CEO, described this future with a bold statement during his March 2025 keynote: “Everything that moves will be autonomous.” In this view, Physical AI is the next logical evolution of AI.

Empowering machines and robots to interact with the physical world requires a broad range of capabilities, starting with an understanding of the 3D space around them. We systematically underestimate how valuable and complex this capability is: humans tend to downplay the importance of everything physical and overrate everything that has to do with language. This bias is reflected and reinforced in society, where intellectual (white-collar) jobs are commonly valued more highly and command higher status than physical (blue-collar) jobs. Yet evolution and the structure of our brain paint a very different picture. The brain areas that handle language are small and evolutionarily recent. Considering this, it is not surprising that machines can handle language so well: a skill that evolved recently is plausibly easier to replicate than one refined over a far longer span. Language, unlike the physical world, is a human construct; it is purely generative and does not exist in nature.

But vision is a totally different story. It has been shaped by millions of years of evolution. Every form of life that developed an eye had to solve the same hard problem: converting 2D images into a 3D predictive reconstruction of the physical space around them in order to survive. This is what enables animals and humans to thrive in the physical world and effectively interact with it.

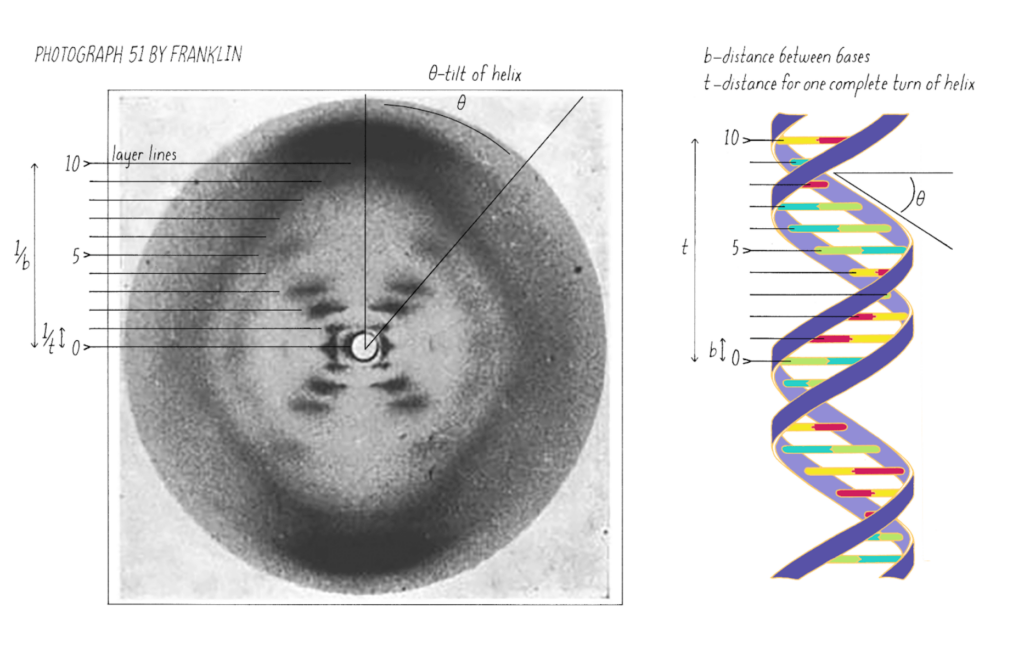

And it does not end there: our understanding of the 3D structure of the world also powers an important part of our reasoning capabilities. Creativity, across design, movie production, architecture, industrial design, and even science, is inherently visual, perceptual, and spatial. When Francis Crick and James Watson co-discovered the beautiful DNA double helix, they did not reason through language alone; they inferred the DNA molecule’s 3D structure from 2D X-ray diffraction patterns.

As Fei-Fei Li puts it, language is a “lossy way to capture the 3D physical world.” If you are blindfolded in a room, a verbal description of the room is not enough to carry out a task in it. With sight, however, the brain immediately reconstructs the 3D space in the mind’s eye, enabling efficient manipulation and interaction. The entire evolutionary history of animals is built on perceptual and embodied intelligence, which humans further leverage to construct and change the world. This is what Spatial Intelligence is about, and it is the fundamental capability that Large Geospatial Models (LGMs) tackle: enabling machines to understand the 3D structure of the world.

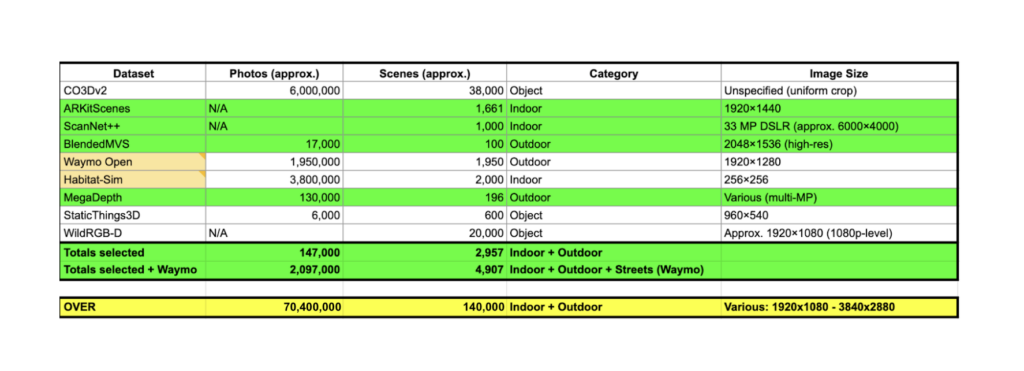

Just like our brain, an LGM can take a 2D view and create a full 3D representation, including what is unseen. This means one can manipulate, move, measure, and stack objects within the computer, enabling applications in robotics, architecture, design, gaming, and even filling in missing data. Machine perception, the ability to reconstruct 3D representations from 2D inputs, is inherently a generative task: a single image does not record how far away each point is, so the model has to fill those informational gaps to map 2D data into 3D space. To do so, LGMs must learn priors about the 3D structure of the world. These models are trained on multiview images of objects and environments, and OVER has built one of the world’s largest datasets of such images, with over 130,000 locations mapped.
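To make the “informational gap” concrete: under a standard pinhole camera model, a pixel only determines a ray through the camera centre, and the depth along that ray has to be supplied or inferred. The minimal sketch below assumes known intrinsics (`fx`, `fy`, `cx`, `cy`, all hypothetical values here) and lifts a depth map into a per-pixel grid of 3D points. An LGM effectively has to infer the depth, and the unseen geometry behind it, that this sketch simply takes as input; it illustrates the geometry only and is not OVER’s pipeline.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def unproject_pixel(u: float, v: float, depth: float,
                    fx: float, fy: float, cx: float, cy: float) -> Point3:
    """Lift pixel (u, v) to a 3D point in the camera frame (pinhole model).

    The pixel alone only fixes a ray through the camera centre; `depth`
    (distance along the optical axis) is the missing piece that pins the
    point down in 3D.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def depth_to_pointmap(depth_map: List[List[float]], fx: float, fy: float,
                      cx: float, cy: float) -> List[List[Point3]]:
    """Convert an H x W depth map into an H x W grid of 3D points."""
    return [
        [unproject_pixel(u, v, d, fx, fy, cx, cy) for u, d in enumerate(row)]
        for v, row in enumerate(depth_map)
    ]


# Toy 2 x 2 depth map in metres, with hypothetical camera intrinsics.
depth = [[2.0, 2.0],
         [4.0, 4.0]]
points = depth_to_pointmap(depth, fx=100.0, fy=100.0, cx=0.5, cy=0.5)
print(points[0][0])  # (-0.01, -0.01, 2.0)
```

Doubling the depth of a pixel doubles its offset from the optical axis, which is why the image alone, without depth, cannot fix where a point sits in 3D.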

The primary application of LGMs is robotics, which encompasses all embodied machines. These machines need to understand, and be trained in, 3D space to perform tasks independently or collaboratively. The problem is fundamentally 3D because physics and interaction happen in 3D: navigating around and behind objects, and composing the world physically or digitally, both require 3D understanding. While humans can reconstruct 3D from 2D video, a computer program or robot needs explicit 3D information to perform spatial tasks such as measuring distances or grasping objects. LGMs bridge this gap.
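A small numerical example of why pixels alone are not enough to measure distance: two pairs of points that are the same 100 pixels apart in an image can be 0.2 m or 1.0 m apart in the world, depending on how far they are from the camera. This sketch assumes a pinhole camera with hypothetical intrinsics (`FX`, `FY`, `CX`, `CY`) and points lying on a plane facing the camera.

```python
import math
from typing import Tuple

Point3 = Tuple[float, float, float]

# Hypothetical pinhole intrinsics for a 640 x 480 camera.
FX = FY = 500.0        # focal length in pixels
CX, CY = 320.0, 240.0  # principal point in pixels


def unproject(u: float, v: float, depth: float) -> Point3:
    """Pixel (u, v) plus depth -> 3D point in the camera frame, in metres."""
    return ((u - CX) * depth / FX, (v - CY) * depth / FY, depth)


def metric_distance(p: Point3, q: Point3) -> float:
    """Euclidean distance between two 3D points."""
    return math.dist(p, q)


# Two point pairs, each 100 pixels apart horizontally in the image,
# but observed at different (fronto-parallel) depths.
near = metric_distance(unproject(270, 240, 1.0), unproject(370, 240, 1.0))
far = metric_distance(unproject(270, 240, 5.0), unproject(370, 240, 5.0))
print(f"near: {near:.2f} m, far: {far:.2f} m")  # near: 0.20 m, far: 1.00 m
```

The pixel measurement is identical in both cases; only the depth, which a robot must either sense or infer, turns it into a usable metric distance.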

But the applications of LGMs do not stop there. If a model can bridge the gap between 2D and 3D, it can also generate infinite, coherent worlds. Early demonstrations of this capability can be seen in models trained by companies such as Odyssey. Applications in gaming, the metaverse, and simulation are virtually endless.

The Role of OVRMaps in Training LGMs

Let’s go back to the high-level task accomplished by LGMs: inferring 3D from 2D inputs. How can such a capability be achieved? Just as LLMs are trained to predict missing or upcoming words in text, LGMs are trained on multiview images of objects and spaces to learn priors about the 3D structure of the world. Given a frontal view of a table, for example, the model must learn to predict how the back of the table is structured, even without additional views. During training, other views of the same scene supply the answer, much as the hidden word does for an LLM.
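To illustrate how a second view can act as the “answer key”, here is a minimal sketch of a reprojection check, one common geometric training signal in multiview learning. It assumes a pinhole camera with hypothetical intrinsics (`F`, `CX`, `CY`), a known relative pose between two views (`IDENTITY`, `T_AB`, also hypothetical), and a single pixel correspondence whose true depth is 2.0 m by construction. It is not a description of how DUSt3R or OVER’s models are actually trained.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def unproject(pixel: Tuple[float, float], depth: float,
              f: float, cx: float, cy: float) -> Vec3:
    """Lift a pixel in view A to a 3D point in camera A's frame (pinhole)."""
    u, v = pixel
    return ((u - cx) * depth / f, (v - cy) * depth / f, depth)


def transform(R: Mat3, t: Vec3, p: Vec3) -> Vec3:
    """Rigid transform p' = R p + t, mapping camera A's frame to camera B's."""
    x, y, z = (sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
    return (x, y, z)


def project(p: Vec3, f: float, cx: float, cy: float) -> Tuple[float, float]:
    """Project a 3D point in a camera's frame onto its image plane."""
    x, y, z = p
    return (f * x / z + cx, f * y / z + cy)


def reprojection_error(pixel_a, depth_guess, observed_b, R, t, f, cx, cy) -> float:
    """Pixel distance between where view B should see the point and where it does."""
    point_b = transform(R, t, unproject(pixel_a, depth_guess, f, cx, cy))
    return math.dist(project(point_b, f, cx, cy), observed_b)


# Hypothetical setup: identical intrinsics, camera B 0.5 m to the right of A.
IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
T_AB = (-0.5, 0.0, 0.0)
F, CX, CY = 500.0, 320.0, 240.0

for depth_guess in (2.0, 4.0):
    err = reprojection_error((420.0, 240.0), depth_guess, (295.0, 240.0),
                             IDENTITY, T_AB, F, CX, CY)
    print(f"depth guess {depth_guess} m -> error {err:.1f} px")
# depth guess 2.0 m -> error 0.0 px
# depth guess 4.0 m -> error 62.5 px
```

A wrong guess about the 3D structure seen in view A lands at the wrong pixel in view B, and that pixel error is the kind of quantity an optimizer can drive down across many views and scenes.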

The raw data used to create OVRMaps (multiview images of locations and objects) is exactly the kind of data needed to train LGMs, and our dataset is massive. Ilya Sutskever, co-founder of OpenAI, famously said, “Models just want to learn.” The implication is that, given enough data, models will learn its underlying structure, unlocking emergent capabilities that once seemed unthinkable. This has been demonstrated empirically with LLMs. Leveraging the OVRMaps dataset to train LGMs will allow the field to move far beyond current capabilities.

Below is a chart comparing the size of the datasets used to train a state-of-the-art LGM such as DUSt3R with the size of OVER’s dataset.

Join our mapping community to become part of the Physical AI revolution

Start mapping: https://link.ovr.ai/download